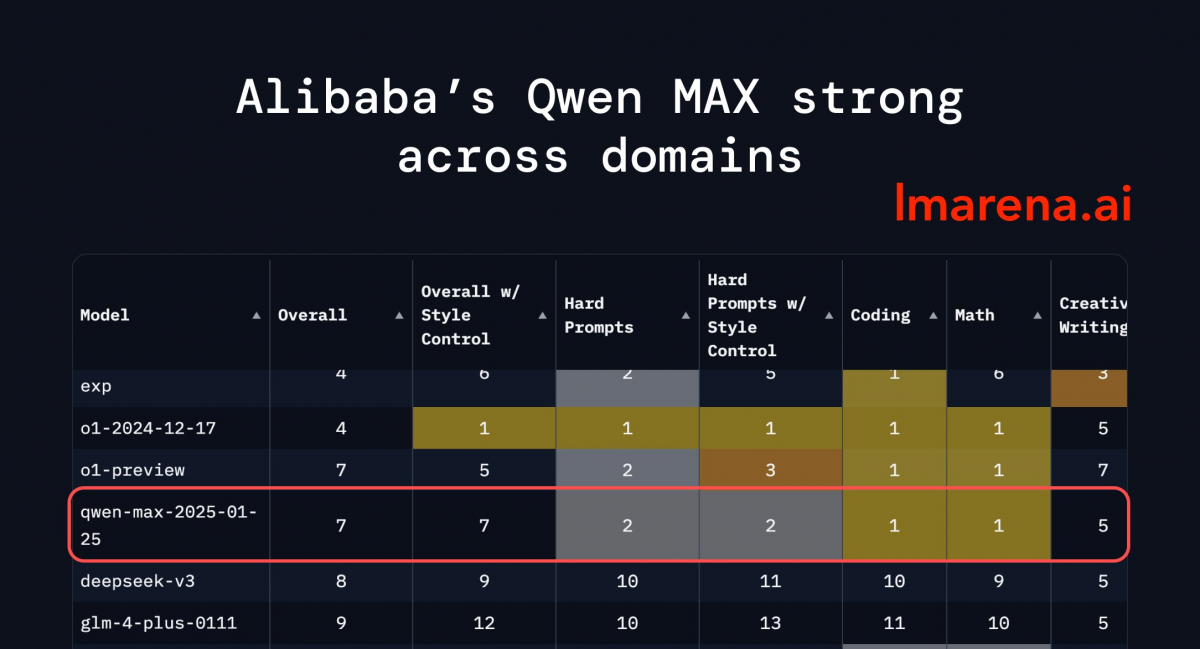

Alibaba Cloud’s latest proprietary large language model (LLM), Qwen2.5-Max, has achieved impressive results on Chatbot Arena, a widely recognized open platform that evaluates the world’s best LLMs and AI chatbots. Ranked #7 overall by Arena score, Qwen2.5-Max is on par with other top proprietary LLMs and is particularly strong in technical domains. It ranks #1 in math and coding and #2 in hard prompts, a category of complex prompts that pose challenging tasks, underscoring its strength on difficult problems.

As a cutting-edge Mixture of Experts (MoE) model, Qwen2.5-Max has been pretrained on over 20 trillion tokens and further refined with Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) techniques. Leveraging these technological advancements, Qwen2.5-Max has demonstrated exceptional strengths in knowledge, coding, general capabilities, and human alignment, securing leading scores in major benchmarks including MMLU-Pro, LiveCodeBench, LiveBench, and Arena-Hard.

Developers and businesses worldwide can access Qwen2.5-Max through Model Studio, Alibaba Cloud’s generative AI development platform, combining high performance with cost efficiency. They can also try the model on the Qwen Chat platform.

Over the past year, Alibaba Cloud has continuously expanded the Qwen family, releasing a series of Qwen models across text, audio, and visual formats in various sizes to meet growing AI demand from developers and customers worldwide. Last month, it unveiled its latest open-source vision-language model, Qwen2.5-VL, which exhibits remarkable multimodal capabilities and can act as a visual agent to carry out tasks on computers and mobile devices. It also released Qwen2.5-1M, an open-source model capable of processing long-context inputs of up to 1 million tokens. Earlier this year, it unveiled an expanded suite of LLMs and AI development tools, upgraded infrastructure offerings, and new support programs for global developers at its Global Developer Summit in Jakarta.